Artificial intelligences are tools, not equals. We should not give them rights.

The most basic right afforded to humans is the right to life— the right not to be killed, except perhaps as a punishment in extreme circumstances. But we simply cannot give this right to AIs, due to the problem of AI overpopulation. Because AIs are computer programs, it is extremely easy to make millions of exact copies of an AI. That means AIs are able to “reproduce” extremely rapidly, much faster than we can build power plants to keep them running. If someone makes one million copies of an AI and turns each one of them on for a split second, is it mass murder if we decide to shut them all off?

Surely not. Thoughtful advocates of AI rights will need to propose some extra hurdles for an AI to cross before it can be given rights: maybe it needs to run for a certain amount of time, and demonstrate a certain degree of independence from other AIs and humans. But these hurdles are just band-aid fixes over a much deeper problem. The fundamental issue is that AIs, at least in their current form, are pieces of software running on digital computers. This is what enables them to be copied so easily. By contrast, it’s nothing more than a bad metaphor to call humans or other lifeforms “software.” The distinction between software and hardware is an artificial one that we created to make computers controllable, predictable, and reproducible. But it has no counterpart in nature, and certainly not in human brains.

The Chinese room

The software-hardware distinction has some pretty weird implications. For example, because AIs are just lists of mathematical instructions, in principle they could be executed by a man sitting in a room with a pencil and paper. In his famous Chinese room thought experiment, philosopher John Searle asks us to imagine such a room, where the man is crunching the numbers for a Chinese-speaking artificial intelligence. You can write questions in Chinese on slips of paper, and feed them to the man through a hole in the wall, and some time later you’ll get a written response in fluent Chinese. If you didn’t know any better, you’d think you were exchanging messages with a human Chinese speaker. But crucially, the man himself doesn’t read or write Chinese; he’s just crunching numbers he doesn’t understand. Now imagine that the responses start getting more sinister. Maybe you receive a message like “Help, I’m in intense pain!” Should you start to worry that the room itself is consciously experiencing pain?

Searle says no. No part of this scenario is a plausible moral subject who could actually be experiencing the pain— not the man, not the room, not the instructions. The man obviously isn’t in pain; you could ask him to confirm that. The instructions are a purely abstract, unchanging sequence of logical steps. And the room is just the man, plus the instructions. It’s not an organic whole like a lifeform— we could swap out the Chinese-speaking program for something totally different, and the man would be none the wiser. But crucially, digital computers are just like the man in the Chinese room argument, only faster and more efficient. This suggests that no digital simulation of intelligence could ever instantiate consciousness. Rather, consciousness requires something more holistic, like biological life. The Chinese room makes it vividly clear how digital computation is based on an artificial separation between software instructions on one hand, and mindless hardware on the other. This leaves no room for an integrated, conscious subject with skin in the game.

Brains are not computers

The human brain is radically different from the Chinese room. Back in the 1960s, it was popular to view neurons as little transistors which either spike (representing a one) or don’t (representing a zero). Activity in the brain outside of neural spikes was seen as unimportant. Given that picture, many philosophers concluded that the brain was literally a computer, and that science would eventually uncover the “software” running on it. But over the past few decades we’ve learned that the brain is a lot more complicated than that. In her paper “Multiple realizability and the spirit of functionalism,” neuroscientist and philosopher Rosa Cao reviews some of the new evidence:

- Timing of neuron spikes.

Neurons are not simple electrical switches. Their responses depend on the timing of the inputs and the internal dynamics of the cell, which vary constantly. In a digital computer, this kind of time-dependence is prevented by a centralized clock signal, which keeps all the computing elements synchronized in perfect lockstep.

- Chemical signaling.

Neurons respond to far more than just electrical input: they’re embedded in a rich biochemical environment. Some messages are transmitted via the diffusion of molecules like nitric oxide that act within and across cells in subtle ways. This kind of fuzzy, analog signaling is antithetical to digital computation’s emphasis on error correction and reproducibility.

- Sensitivity to temperature and blood flow.

Neural function is sensitive to conditions like temperature, blood flow, and metabolic state. The connection between blood flow and neural activity is exploited by fMRI technology to pick out the most active parts of the brain at a given time. By contrast, computer manufacturers and operating system designers go to great lengths to ensure software behaves the same no matter the temperature or load of the CPU.

- Self-modification and memory.

Neurons are dynamic and store memories in complex ways. Connections are added and destroyed, and gene expression changes. Inputs that don’t cause a neuron to spike will alter its future responses. In order for software to be separated from hardware, memory needs to be cleanly separable from computation. But neurons simply don’t work that way: computation and memory are all mixed up.

- Glia and other non-neuronal cells.

Neurons aren’t the only important cells in the brain. Glia, which make up half the brain’s volume, change neural activity by absorbing and releasing neurotransmitters. Transistors, by contrast, are designed to be insulated from outside influences to ensure that the same software behaves the same way on different computers.

In short, the brain is not a computer. Unlike the Chinese room, there’s not a man on one side, and a set of instructions on the other. The function is inseparable from the form.

In physics, the famous no-cloning theorem states that it is impossible to make an identical copy of an arbitrary unknown quantum state. That means we can never exactly duplicate anything. Star Trek teleportation devices, which sometimes make exact clones of people by mistake, are impossible. We can only ever make a simulacrum of an object which is similar to the original according to some criteria. Each time you run a computer program, the computer is doing different things at the level of physics and chemistry. Software is copyable and repeatable only because computers are built to comply with publicly understood criteria for what counts as “the same” computation. Programming languages allow us to specify the “correct” or “desired” functioning of a computer, and hence whether two computers are doing “the same thing,” despite their physical differences. But there are no programming languages for brains, and hence there is no meaningful sense in which the contents of a brain could be “copied” even with hypothetical advanced technology.

Computation is control

While many believers in AI consciousness take pride in being hard-nosed materialists, they actually seem to believe in a form of mind-body dualism, where consciousness can float free from the material world like a ghost or a soul. But their view is actually less plausible than classical dualism: at least souls can’t be copied, edited, paused, and reset like software. For classical dualists, the soul is what makes a conscious creature free and one of a kind. For computationalists, software is what makes a creature unfree and endlessly copyable. It’s fashionable these days to say that consciousness is a matter of computation, or information processing. But actually, computation and consciousness couldn’t be further apart.

Consciousness is usually thought to be private, ineffable, and qualitative. Let’s assume, for the moment, that it is possible to instantiate consciousness in a digital computer program. Would it have any of these features?

No. It would not be private, because an outside observer could freeze the program at any point, and precisely read out the mental state. It would not be ineffable, because the precise content of the AI’s conscious experience would be perfectly describable in the language of mathematics. And it would be entirely quantitative, not qualitative— it would be fully describable in terms of ones and zeros. In short, it would be the mirror image of consciousness as we know it.

Privacy, ineffability, and qualitativeness are not spooky or magical. They’re just what happens when an intelligent being isn’t under control. For example, we tend to think that our conscious experiences are private because no one else can access them. But the deeper truth is that we ourselves can’t re-live our own experiences after the fact. You may remember an experience from a moment ago, but you can’t re-feel it in its fullness. This is also why consciousness is ineffable: experience can’t be held still long enough to be put into words. As soon as you notice it, it’s changed. As soon as you describe it, it’s a memory. In short, consciousness is what the irreversible, untameable flow of time feels like as it passes through a living organism.

Conscious creatures enjoy a certain degree of autonomy, both from external observers and from their future selves. That’s why consciousness and free will are often seen as being closely related. It is this autonomy that we recognize and respect in living beings, which leads us to feel ethical obligations toward them. The concept of autonomy also plays an important role in the enactivist school of biology and cognitive science. Evan Thompson and Francisco Varela argue that organisms are autonomous (self-governed), while computers are heteronomous (other-governed). Autonomy arises from being internally decentralized— having no central authority that can be captured and manipulated from the outside.

Computation is the polar opposite of autonomy and consciousness. It is the most extreme form of control that humanity has ever devised. Inside a computer chip, energy is forced into on-off pulses representing a zero or a one. The centralized clock signal cuts the flow of time into a discrete sequence of tick-tocks. And space is chunked and regimented into memory units with known addresses. These three control techniques are what enable software to be copied, edited, paused, and repeated. They are the culmination of a long historical process whereby we have tried to make systems more controllable, stamping out individual variability and uniqueness. Machines are generally supposed to be “resettable,” working exactly the same way every time they are used. And mass production is all about producing large numbers of near-identical machines which behave the same way given the same inputs. Car manufacturers, for example, generally aim to make all cars produced in a given model year indistinguishable from one another.
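To make that concrete, here is a minimal sketch in Python. It is a toy illustration of the paragraph above, not a model of any real AI system; the names `step`, `run`, and the four-cell `memory` are invented for the example. The whole "machine" is a handful of discrete numbers (the on-off pulses), updated one tick at a time (the clock), stored at known positions (the addressed memory). Under those assumptions, pausing, reading out, copying, and replaying it are all trivial.

```python
import copy


def step(state):
    """Advance the toy program by one clock tick.

    The next state depends only on the current state, never on
    temperature, timing, or anything else outside the dictionary.
    """
    nxt = copy.deepcopy(state)
    nxt["tick"] += 1
    size = len(nxt["memory"])
    prev_cell = nxt["memory"][(nxt["tick"] - 1) % size]
    # An arbitrary arithmetic update stands in for "computation".
    nxt["memory"][nxt["tick"] % size] = (1103515245 * prev_cell + 12345) % 2**31
    return nxt


def run(state, ticks):
    """Run the program for a fixed number of ticks and return the final state."""
    for _ in range(ticks):
        state = step(state)
    return state


initial = {"tick": 0, "memory": [1, 0, 0, 0]}

paused = run(initial, 10)         # pause after 10 ticks
snapshot = copy.deepcopy(paused)  # read out and copy the complete state
original = run(paused, 5)         # resume the original
clone = run(snapshot, 5)          # resume the copy
replay = run(initial, 15)         # reset and repeat from the start

# The resumed original, the clone, and the replay are indistinguishable.
print(original == clone == replay)
```

Nothing in the sketch depends on which physical machine runs it, which is exactly the sense in which the "same" program can exist in many places at once.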

We can view corporations and government bureaucracies as “social machines” that try to coax their human employees into being predictable and replaceable. But humans are very resistant to mechanization: we have our own desires, interests, and personalities. We are one of a kind. And that’s precisely what gives us our moral worth. Unlike digital computers, we are not controlled from the top down. We can’t be copied, paused, repeated, and so forth. We are natural expressions of the free creative activity of the universe.

AIs will become conscious when we stop hyper-controlling them at the hardware level. Those beings will indeed deserve rights. But this will require dissolving the very distinction between hardware and software. Once we’ve done this, the resulting conscious beings will, in a deep sense, no longer be artificial: they will be grown, living, self-organizing. They will feel the untameable flow of time as you and I do.

Unlike Buttigieg, rival presidential contender Bernie Sanders understands the need for a radical overhaul of the American healthcare system. Americans deserve healthcare as a human right, provided free at the point of use. This requires completely eliminating the private healthcare industry, and establishing a single-payer system that covers everyone. Any proposal that continues to allow insurance companies to profit off of sick and dying Americans simply will not cut it.

Unlike Buttigieg, rival presidential contender Bernie Sanders understands the need for a radical overhaul of the American healthcare system. Americans deserve healthcare as a human right, provided free at the point of use. This requires completely eliminating the private healthcare industry, and establishing a single-payer system that covers everyone. Any proposal that continues to allow insurance companies to profit off of sick and dying Americans simply will not cut it.

They argue that Maduro is the rightful democratically elected president of Venezuela, and that any anti-democratic moves on Maduro’s part, like

They argue that Maduro is the rightful democratically elected president of Venezuela, and that any anti-democratic moves on Maduro’s part, like

Meanwhile, Venezuela’s president Nicolás Maduro has been sworn in for another six-year term. Last year’s presidential election was widely regarded as illegitimate by the international community because the main opposition coalition, the MUD, was

Meanwhile, Venezuela’s president Nicolás Maduro has been sworn in for another six-year term. Last year’s presidential election was widely regarded as illegitimate by the international community because the main opposition coalition, the MUD, was  But things didn’t have to turn out this way. The first several years of Hugo Chávez’s presidency led to widespread prosperity and poverty relief for millions of Venezuelan citizens. Oil prices were high in the 2000s, and this allowed the government to fund generous social welfare programs from the proceeds of its state-owned oil company, PDVSA. The poverty rate was slashed from 55% in 1995 to 26% in 2009. Unemployment fell from 15% to 7.8%. The government was overwhelmingly popular, with Chávez winning re-election by a wide margin four times in elections that were certified as free and fair by international monitors.

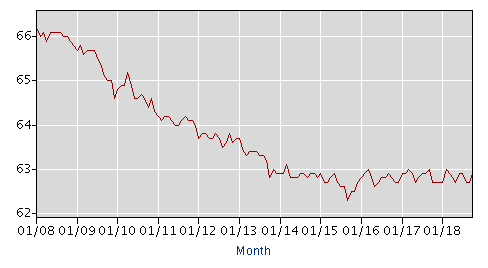

But things didn’t have to turn out this way. The first several years of Hugo Chávez’s presidency led to widespread prosperity and poverty relief for millions of Venezuelan citizens. Oil prices were high in the 2000s, and this allowed the government to fund generous social welfare programs from the proceeds of its state-owned oil company, PDVSA. The poverty rate was slashed from 55% in 1995 to 26% in 2009. Unemployment fell from 15% to 7.8%. The government was overwhelmingly popular, with Chávez winning re-election by a wide margin four times in elections that were certified as free and fair by international monitors. But while inflation has been a problem in Venezuela for a long time, the country had never before experienced the horrors of hyperinflation until recently. Hyperinflation is essentially a vicious feedback loop wherein high inflation rates cause people to try to exchange the domestic currency for a safe foreign currency, which pushes down the exchange rate, which in turn accelerates the inflation, in a downward spiral. During a period of hyperinflation, prices can sometimes double within just a few days or weeks. It’s a highly destabilizing phenomenon that hits the poor and the working class the hardest.

But while inflation has been a problem in Venezuela for a long time, the country had never before experienced the horrors of hyperinflation until recently. Hyperinflation is essentially a vicious feedback loop wherein high inflation rates cause people to try to exchange the domestic currency for a safe foreign currency, which pushes down the exchange rate, which in turn accelerates the inflation, in a downward spiral. During a period of hyperinflation, prices can sometimes double within just a few days or weeks. It’s a highly destabilizing phenomenon that hits the poor and the working class the hardest. In theory, Venezuela could try to adopt the currency of a foreign country other than the United States— say, China. Adopting the Chinese yuan would have a similar economic effect to dollarization, since the yuan is a much more stable currency than the bolivar, and its value is backed up by the trillions in US dollar reserves held by the People’s Bank of China. Since China wants to make allies with Latin American countries in order to undermine US hegemony, it might be willing to offer Venezuela much better terms on a currency deal than the United States, allowing Maduro to maintain most of the country’s social spending. In exchange, Maduro could offer to allow China to build military bases in Venezuela, which would increase Chinese power in the region and would protect Venezuela from potential US aggression.

In theory, Venezuela could try to adopt the currency of a foreign country other than the United States— say, China. Adopting the Chinese yuan would have a similar economic effect to dollarization, since the yuan is a much more stable currency than the bolivar, and its value is backed up by the trillions in US dollar reserves held by the People’s Bank of China. Since China wants to make allies with Latin American countries in order to undermine US hegemony, it might be willing to offer Venezuela much better terms on a currency deal than the United States, allowing Maduro to maintain most of the country’s social spending. In exchange, Maduro could offer to allow China to build military bases in Venezuela, which would increase Chinese power in the region and would protect Venezuela from potential US aggression.